Table of Contents

Abstract

Abstract: This thesis explores the hypothesis that complexity in the universe tends to increase over time, not only in biological systems but also in nonliving systems. We integrate perspectives from physics, astrobiology, evolutionary biology, and philosophy of science to argue that there is a directional trend—an “arrow of complexity”—complementing the arrow of entropy. We examine functional information as a quantitative measure of complexity and discuss how iterative selection processes, analogous to natural selection, operate in domains beyond biology. We propose that a law of increasing functional information may represent a new fundamental principle, consistent with known physics yet highlighting the role of selection in driving evolution . Sudden “phase transitions” in complexity (e.g. the origin of life, multicellularity, cognition) are analyzed as critical points where new emergent rules and functions redefine what counts as complex. We explore how context and functionality determine complexity, noting that as systems evolve, novel functions and contexts co-evolve. The thesis also delves into the epistemological implications of open-ended, self-referential evolutionary processes, drawing analogies to Gödel’s incompleteness (i.e., no fixed formal system or encompass the unbounded emergence of novelty in evolution). Expanding possibility spaces allow new structures and laws to emerge (“more is different”), suggesting that higher-level complexities are not reducible to initial conditions alone. Finally, we discuss implications for astrobiology and consciousness: if complexity’s increase is universal, life and intelligence may be common outcomes of cosmic evolution, and consciousness might be understood as a high-level manifestation of complex information integration. Keywords: complexity, functional information, selection, emergence, entropy, astrobiology, open-ended evolution, consciousness.

Introduction

The universe has evolved from a hot, uniform primordial state to a cosmos rich with structure: galaxies and stars, diverse chemical elements, planets, and on at least one planet, living organisms capable of reflecting on the cosmos itself. This progression invites a profound question: Does complexity tend to increase over time as a general trend or law of nature? The intuition that there is a progressive buildup of complexity – from quarks to atoms, to molecules, to life, to mind – stands in contrast to the Second Law of Thermodynamics, which dictates an overall increase in entropy (disorder) over time. Yet, we observe that localized pockets of the universe (e.g. Earth’s biosphere) have produced highly ordered, complex systems by dissipating energy to their surroundings, thus obeying the Second Law while carving out pockets of increasing complexity. This apparent paradox between entropy and complexity motivates our investigation.

Background and Hypothesis:

In biological evolution, it has long been debated whether there is an intrinsic drive toward greater complexity. While Darwinian evolution has no predetermined goal, the fossil record shows episodes of increasing organismal complexity (for example, the emergence of multicellular life from unicellular ancestors). Some theorists have proposed that even without adaptive “drive,” a passive tendency exists for complexity to increase over time due to diffusion away from minimal complexity states (McShea’s “Zero-Force Evolutionary Law”). Our hypothesis, however, extends beyond biology: we posit that selection-like processes operating on variations can cause complexity to rise in physical and chemical systems as well. In other words, the same fundamental ingredients – variation, differential persistence, and accumulation of information – may underpin a general complexification of matter and energy in the universe.

Scope and Aims:

This thesis seeks to rigorously explore the idea of a universal trend of increasing complexity. We will examine concepts of functional information (a measure of info (Consciousness, complexity, and evolution - PubMed)chieves specific functions) as a unifying metric for complexity in both living and nonliving systems. We will ask whether there could be a new law of nature analogous to the laws of thermodynamics that encapsulates this complexification tendency. Key themes include the role of selection processes (broadly construed) in driving complexity, the importance of context and function in defining what is complex, and the occurrence of major transitions or phase changes where complexity jumps discontinuously. We will also tackle the philosophical implications of open-ended evolution – in which there is no final “highest” complexity because new possibilities keep emerging – and the limits of formal reducibility of such processes (drawing parallels to Gödel’s incompleteness theorem). Finally, we will discuss what an “arrow of complexity” might mean for predicting the presence of life elsewhere in the universe and for understanding consciousness as a phenomenon of organized complexity.

Structure:

The thesis is organized as follows. In the Literature Review, we survey existing research on complexity in cosmology, biology, and information theory, including attempts to quantify complexity and previous arguments for or against an intrinsic directionality in evolution. The Theoretical Framework chapter defines key concepts – entropy versus complexity, various complexity metrics, functional information, and generalize (How much consciousness is there in complexity? - Frontiers)– that will be used in our analysis. The Core Arguments are presented in several chapters, each tackling one aspect of the central hypothesis: (1) functional information’s role in evolution, (2) the universality of selection processes beyond biology, (3) complexity as a directional arrow of time, (4) a possible new law of complexification in analogy to thermodynamics, (5) the evolving nature of function and context in complexity, (6) sudden jumps in complexity and major transitions, (7) the epistemological implications of self-referential open-ended systems (with Gödelian analogies), and (8) expanding possibility spaces and emergent new rules. We then turn to Implications and Predictions, where we speculate on the future trajectory of complexity on Earth and in the cosmos, and how this framework guides the search for extraterrestrial life and an understanding of consciousness. In Limitations and Open Questions, we critically assess the hypothesis, addressing potential counterarguments (e.g. periods of decreasing complexity, or alternative explanations) and identifying what remains uncertain. Finally, the Conclusion summarizes our findings and suggests directions for future research into the laws of complexity.

By integrating knowledge across disciplinary boundaries, this work aims to synthesize a coherent argument that complexity’s increase is not an accidental byproduct confined to biology, but rather a fundamental, unifying process in nature – one that complements the increase of entropy and might guide us toward new principles in science.

Literature Review

Complexity in Physical and Biological Systems

Evolution of Complexity in Cosmology:

From a cosmological perspective, the universe’s history can be seen as a series of emergent complex structures. After the Big Bang, the nearly uniform plasma gave way to atoms (simple structures), which then gravitated into stars and galaxies, enabling nucleosynthesis of heavier elements. Those elements formed planets and, on at least one planet, combined into self-replicating molecules and living cells. Astrophysicist Eric Chaisson has quantitatively argued that complexity has risen over cosmic time by analyzing the energy flow through systems. He introduced a metric called free energy rate density (energy flow per unit mass), finding that this metric correlates with intuitive complexity: galaxies have higher energy rate densities than diffuse gas, stars higher than galaxies, planets higher than stars, living cells higher than planets, and human brains higher than simple cells. Chaisson’s data, plotted over ~14 billion years of cosmic evolution, show an overall exponential increase in this complexity metric from the Big Bang to the present. While entropy of the universe as a whole increases, these results suggest that pockets of the universe (open systems far from equilibrium) can and do become more complex by utilizing energy gradients. In Chaisson’s words, “energy flow as a universal process helps suppress entropy within increasingly ordered, localized systems…in full accordance with the second law of thermodynamics” – no known physical laws are violated by local increases in order, because greater disorder (heat) is dumped into the environment.

Evolution of Complexity in Biology:

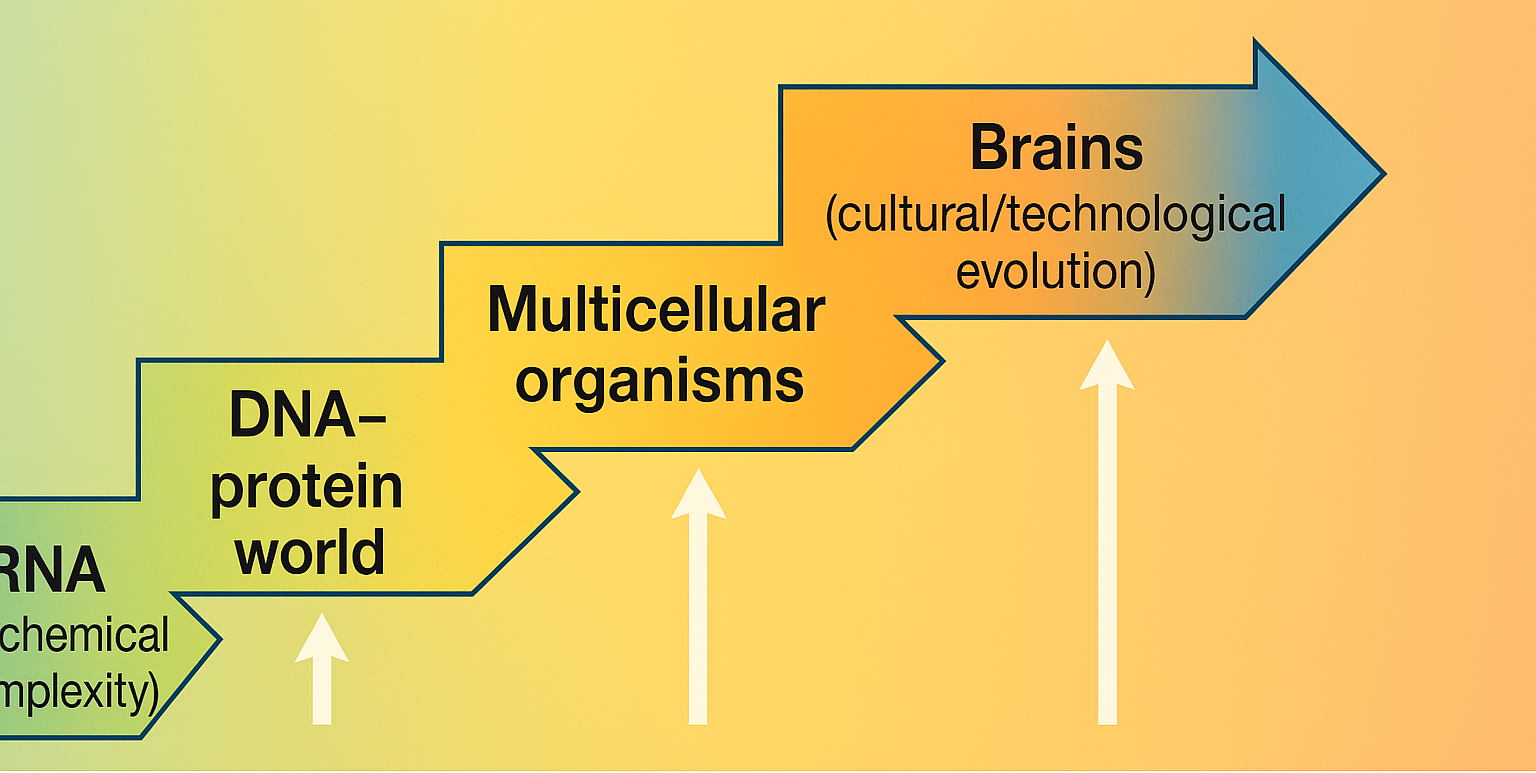

Biological evolution provides the most well-studied example of increasing complexity. Over Earth’s history, life has diversified from relatively simple single-celled organisms to extremely complex multicellular organisms and ecosystems. Theoretical biologists John Maynard Smith and Eörs Szathmáry famously catalogued the Major Transitions in Evolution – landmark events such as the origin of replicating molecules, the emergence of chromosomes, the origin of the genetic code, the evolution of eukaryotic cells (with organelles), the evolution of multicellularity, the origin of societies, and language. Each transition represented smaller units coming together to form larger, more complex units with new types of information flow (e.g., from single genes to chromosomes, or from single-celled organisms to multicellular ones). These transitions illustrate how complexity sometimes increased in jumps rather than a smooth continuum. They also show that what counts as a unit of selection can change – for instance, once independent cells become integrated into a multicellular organism, the “organism” becomes the new unit of selection, leading to a new level of complexity.

Debate exists in evolutionary theory about whether complexity’s increase is an inherent trend or a passive result of random variation. Stephen Jay Gould argued that the appearance of a trend toward complexity can arise simply because there is a lower bound on simplicity (you can’t get much simpler than a bacterium), so over time variance will spread out and some lineages will become more complex even without any directional pressure (“drunkard’s walk” model). In contrast, Daniel McShea and Robert Brandon proposed a “Biology’s First Law” – that in the absence of constraints, complexity (measured as differentiation of parts) will tend to increase spontaneously (a passive trend). Empirical work by McShea and others found that, indeed, over geological time the maximum complexity of organisms tends to increase, although the mode or average might not (many organisms remain simple). Thus, there is evidence for an overall envelope of increasing maximal complexity, even if not all lineages become more complex.

Quantifying Complexity:

A challenge in these discussions is defining and quantifying “complexity.” Different metrics exist, each capturing different intuitions:

- Shannon entropy measures information as uncertainty or disorder, but a high-entropy string (random noise) is typically not what we mean by complex organization.

- Algorithmic (Kolmogorov) complexity measures the length of the shortest program to produce an object. Random noise has high Kolmogorov complexity (no short description), whereas a crystal’s repeating pattern has low complexity. However, algorithmic complexity alone doesn’t distinguish meaningful organization from randomness.

- Effective complexity (proposed by Murray Gell-Mann) attempts to measure the length of a description of the regularities of an object (ignoring random noise) – in theory capturing the structured, non-random aspect that makes something like a living cell more complex than dust.

- Physical complexity (proposed by Seth Lloyd and others) might measure the amount of information a system stores about its environment or past (e.g., how much data is embodied in an organism’s structure).

- Functional information is a particularly useful concept for evolving systems: it measures the amount of information required for a system to achieve a specific function above a given performance threshold. Developed by Robert Hazen and colleagues, this metric explicitly ties complexity to function. For example, among all possible random polymer sequences, very few will fold into a protein that performs a specific job (catalyzing a reaction). Those sequences that do have high functional information relative to that function because a lot of information is needed to specify them out of the combinatorial universe of possibilities. Functional information is measured in bits as I(Ex)=−log2[F(Ex)]I(E_x) = -\log_2 [F(E_x) ], where F(Ex)F(E_x) is the fraction of configurations that achieve at least a given level of funct (Why Everything in the Universe Turns More Complex | Quanta Magazine)L31-L40】. The more demanding the function (higher ExE_x), the smaller F(Ex)F(E_x) is, and thus the higher the information required. This concept will be central to our analysis, as it provides a way to quantify complexity in both living and nonliving systems in terms of what they do (their function) rather than just their microscopic description.

Nonliving Complex Systems and Self-Organization:

Outside of biology, researchers in complexity science have noted many cases of spontaneous ordering in physical and chemical systems. Dissipative structures, a term from Ilya Prigogine, refer to ordered patterns that emerge in systems driven far from equilibrium by energy flow – classic examples include convection cells (Bénard cells) in a heated fluid and the beautiful regularity of a chemical oscillation (Belousov-Zhabotinsky reaction). These structures are temporary and local, but they show that increase in order can naturally occur in nonliving systems given a constant throughput of energy. Similarly, in chemistry, we see self-assembly processes: molecules can spontaneously form organized structures (like lipid membranes or crystals) under the right conditions. While these examples are not increasing complexity indefinitely, they hint at how natural processes can create pockets of order.

One interesting example at a geological scale is mineral evolution. Hazen and collaborators documented that the diversity of minerals on Earth increased dramatically over geological time: early Earth had maybe a couple of dozen types of minerals, whereas today thousands are known. This increase was driven by chemical and environmental changes (e.g., oxygenation enabling new oxides, biological activity creating biominerals). It was not random: each stage of mineral diversification built upon the previous, as new minerals formed in new environments (water-mediated weathering, high-pressure mantle environments, etc.). One can view this as a non-biological complexity increase (in terms of information needed to specify mineral structures, or simply count of distinct solids) driven by planetary evolution. Mineral evolution has even been framed in selecti (On the roles of function and selection in evolving systems | PNAS)inerals that are stable under Earth’s surface conditions “survive” while others transform, implying an analog of selection for persistence of certain crystal structures. Although minerals do not reproduce, the concept of stability under environmental pressures plays a similar filtering role.

Universality of Evolutionary Principles:

Several thinkers have speculated that Darwinian principles might extend beyond biology. The idea of “Universal Darwinism,” introduced by Richard Dawkins and further developed by others like Donald Campbell, suggests that whenever you have systems that replicate with variation and differential success, you will get an evolutionary process leading to adaptation. This has been invoked in contexts such as the immune system (clonal selection of antibodies), cultural evolution (memes), and even cosmic evolution. For instance, physicist Lee Smolin proposed a controversial idea of cosmological natural selection – universes might reproduce via black holes, with slightly different physical constants, and those universes that make more black holes “reproduce” more, suggesting a selection at the multiverse level. While highly speculative, it shows the temptation to see analogies between biological evolution and other domains.

What is emerging from all these studies is a picture that complexity can increase under the right conditions in many systems, and that some general principles – especially the combination of generation of diversity and some kind of selection mechanism – may underlie this increase. However, until recently, no one had formulated a concise “law” for this, analogous to the laws of motion or thermodynamics. Most scientists considered the rise of complexity as a result of many contingent processes rather than a fundamental principle. This thesis, as previewed above, examines a bold proposal that a law-like statement might indeed capture the essence of evolving complexity across biology and physics: the law of increasing functional information. Before developing that argument, we will establish the theoretical framework needed to articulate it clearly.

Complexity, Entropy, and the Arrow of Time

A crucial part of the background is understanding how a directional increase in complexity can coexist with, and indeed be facilitated by, the Second Law of Thermodynamics – the traditional “arrow of time.” The Second Law states that in an isolated (closed) system, entropy (disorder) never decreases; in fact, it increases in any spontaneous process. This law underpins why certain processes are irreversible (e.g. you can’t unmix milk from coffee or cause heat to flow from cold to hot spontaneously). At first glance, the Second Law seems to imply that order and complexity should give way to disorder over time, not increase. How then can galaxies, organisms, and minds arise?

The resolution lies in the fact that Earth, and other systems where complexity grows, are not isolated systems. They are open systems that exchange energy and matter with their environment. Earth receives low-entropy (high-quality) energy from the sun (mostly visible light) and radiates away higher-entropy (lower temperature) energy to space (infrared). This flow essentially allows Earth to export entropy to space, while locally building up order. As physicist Erwin Schrödinger noted in What is Life? (1944), organisms “feed on negative entropy” – they maintain and increase their internal order by exporting entropy to their environment. In thermodynamic terms, the entropy decrease associated with forming a complex structure is offset by a larger entropy increase in the surroundings (often as waste heat). Thus, there is no conflict with the Second Law; rather, the Second Law drives a lot of these processes: systems tend to evolve to dissipate energy gradients, and in doing so they often form complex structures (a concept related to the idea of maximum entropy production principle).

Some researchers have posited that this tendency to form dissipative structures could itself be viewed as a law-like behavior. For example, astrophysicist Chaisson (cited above) argued that the increase in complexity is natural in an expanding, energy-diverse universe. Lineweaver and Egan (2008) explored how life and complexity are consistent with thermodynamics, even suggesting that gravitational clumping (which creates stars and planets – low entropy configurations locally) is an entropy-increasing process overall when you include the generated heat. They phrased it as: entropy (disorder) can increase globally while complexity increases locally, thanks to energy flows.

Philosopher-scientist Sir Julian Huxley in the mid-20th century and Jesuit paleontologist Pierre Teilhard de Chardin earlier had envisioned an evolutionary cosmology where complexity (and consciousness) increase in a quasi-teleological way. Teilhard even suggested a “Law of Complexity-Consciousness” in which increasing material complexity eventually produces higher levels of consciousness – though his ideas were more mystical and not framed in testable scientific terms. Those early ideas set a stage for thinking big about complexity, but modern science requires empirical measures and mechanisms, which is what this thesis emphasizes.

In summary, the literature indicates that:

- The universe’s history provides numerous examples of complexity emerging and growing.

- There is no consensus “law” of complexity, but multiple lines of evidence and theory point toward common principles (like energy flows and selection processes) enabling complexity to rise.

- Any such increase does not violate thermodynamics; rather it relies on thermodynamics in open systems.

- Major transitions in evolution mark significant jumps in complexity, often associated with innovation in information storage/transmission.

- A quantitative handle on complexity that spans physics and biology has been elusive, but concepts like functional information and assembly theory (discussed later) are promising bridges.

These points set the stage for our theoretical framework: we need to formalize what we mean by complexity increase, identify the mechanisms (with selection as a prime suspect), and ensure consistency with fundamental physics. The next chapter develops this framework.

Theoretical Framework

In this chapter, we establish definitions and concepts that will be used throughout our analysis. We clarify what is meant by complexity, functional information, and selection, and describe how these concepts can apply across different domains (physical, chemical, biological, cultural). We also outline the notion of an evolutionary process in a general sense, and how it might generate increasing complexity. Finally, we distinguish complexity from entropy and articulate the conditions under which complexity can increase (open systems driven far from equilibrium).

Defining Complexity and Functional Information

Complexity:

We define complexity, in a general sense, as the degree of structured heterogeneity in a system – that is, how intricate and non-random the arrangement of a system’s parts is. This definition is intentionally broad, as complexity can manifest in many ways (the intricate folding of a protein, the wiring diagram of a brain, the hierarchical structure of a galaxy cluster, etc.). We refine this broad notion by focusing on functional complexity: the complexity relevant to a system’s function or behavior. A random pile of rubble may be geometrically complex, but if it accomplishes nothing, one could argue it’s “organized complexity” that matters more for our purposes (as in Herbert Simon’s definition of complexity relating to how components are organized for some purpose).

Functional Information (FI):

Proposed by Hazen et al. (2007), functional information is a quantitative measure tailored to the idea of function or performance. For a given system and a specified function (with a performance threshold), FI measures how improbable it is to achieve that function by chance. Formally, if NtotalN_{total} is the total number of possible configurations of the system (e.g., all possible sequences of amino acids of a certain length), and N(Ex)N(E_x) is the number of configurations that achieve at least a level ExE_x of performance at the function, then F(Ex)=N(Ex)/NtotalF(E_x) = N(E_x)/N_{total} is the fraction of configurations that meet or exceed that function level. The functional information is defined as I(Ex)=−log2[F(Ex)]I(E_x) = -\log_2 [F(E_x)]. If only 1 in a million configurations can do the function, II is about 20 bits (since 220≈1062^{20} \approx 10^6). If 1 in 2 can do it (a trivial function many configurations satisfy), II is 1 bit. FI is thus high for systems that are highly specified to achieve a function.

A crucial aspect of FI is that it is context-dependent and function-dependent. The same physical configuration can have high FI with respect to one function and zero with respect to another. For example, a hammer has high FI for pounding nails (few random objects can do that well), but near zero FI for, say, absorbing water (many materials can absorb water, a hammer cannot – but we wouldn’t consider that its function). Hazen et al. emphasize that FI must be defined relative to a chosen function and environment, but that doesn’t make it subjective – one can objectively state the function and measure performance. The maximum FI of a system is achieved when only a single configuration accomplishes the function to the highest degree, essentially encoding all bits of the configuration as functional requirements. The minimum FI is zero, when a function is so easy that even random configurations can do it (or no configuration can do it at all).

Why use functional information? Because it directly ties the idea of complexity to something that matters for the system’s propagation or stability. Biological evolution cares about functional complexity (e.g., a slight change in DNA that improves an enzyme’s function can be selected for). In nonliving systems, we can analogously think in terms of function: for instance, in a star, one might say the “function” is fusing hydrogen (or simply persisting in hydrostatic equilibrium); only certain configurations of mass/pressure/temperature profile achieve that stable star state – we could talk about FI associated with stellar stability. In mineral evolution, the “function” might be being a thermodynamically stable mineral under certain conditions. Hazen’s concept essentially allows us to quantify how special or rare a configuration is given a functional criterion, which correlates with what we intuitively call complexity or specificity.

Throughout this thesis, when we speak of complexity increasing, we often mean that the functional information content of systems is increasing. For example, the first replicating RNA molecule on Earth had some functional information (relative to the function of self-replication), but today’s DNA-polymerase-and-ribosome-based replication system has astronomically more FI with respect to precise self-reproduction and metabolism. That increase in FI corresponds to a huge increase in complexity required for life’s functions.

Generalized Selection and Evolutionary Processes

Selection:

In biology, natural selection is the differential survival and reproduction of entities (organisms or genes) based on her (On the roles of function and selection in evolving systems | PNAS)ion in traits. A key insight of this thesis is that selection in a broader sense can occur in systems that are not strictly biological reproduction. We define selection in a generalized way: any process by which some configurations of a system preferentially persist or proliferate over others, due to some criterion (which we can call a function or fitness). Selection requires a mechanism to generate or present variation (different configurations) and a mechanism to choose among them based on performance at some function (which could be as simple as stability under given conditions).

Hazen et al. (2023) identify three universal concepts of selection applicable to various systems:

- Static persistence: some configurations just last longer (are more stable) than others. (In a chemical system, a more stable molecular arrangement might persist while others break down.)

- Dynamic persistence: some configurations are generated or replenished at higher rates. (In an ecosystem, rabbits reproduce faster than elephants, for example.)

- Novelty generation: processes that create new configurations (mutations, innovations, recombination).

Together, these ensure that out of a huge space of possibilities, some subset comes to dominate the population of configurations as time goes on. Whether that population is “molecules in a test tube,” “mineral species on Earth,” or “organisms in an ecosystem,” the idea is similar.

Hazen and colleagues have boldly proposed a “Law of Increasing Functional Information”: The functional information of a system will increase over time (i.e., the system will evolve) if many different configurations of the system undergo selection for one or more functions. This law is meant to parallel other natural laws by identifying a parameter (functional information) that universally tends to increase under certain conditions. Notably, this law doesn’t say complexity must always increase; it gives a condition – presence of variation and selection for function – under which complexity will increase. It’s essentially generalizing Darwin’s insight: selection inexorably accumulates adaptations (which are functional information).

For this law to hold outside biology, we need to recognize selection-like dynamics in other realms:

- In stars: Among many possible dispersal of matter, only certain configurations form long-lived stars. One can say gravity “selects” clumps of matter that are able to initiate fusion and withstand pressure. Those configurations persist (stars shine for billions of years), while others (random gas dispersions) do not form bound structures and thus “fade away.” Over cosmic time, matter has been increasingly found in forms that are locally more complex (stars, planets) because simpler distributions (homogeneous gas) are unstable and collapse – a kind of selection for gravitationally bound states.

- In chemistry: If we start with simple molecules and provide energy, we might get a host of products, but the ones that accumulate are those that are stable (static persistence) or those autocatalytically produced (dynamic persistence). This is chemical selection. Over time, more complex molecules that are stable in the environment can build up (like a network of organic reactions on the early Earth leading to the first replicators).

- In technology or culture: Ideas, inventions, and designs undergo selection in human society – useful ones spread, useless ones are discarded. This has led to increasing complexity in culture (compare technology today vs. the Stone Age). Although guided by intelligence, the cumulative evolution of technology has parallels with biological evolution (variation generated by inventors, selection by markets or utility, retention of improvements).

*Arrow of Time (On the roles of function and selection in evolving systems | PNAS) (On the roles of function and selection in evolving systems | PNAS) Selection processes impart an arrow of time because they are inherently historical and path-dependent. Once a particular complex configuration is reached (e.g., a DNA-protein world in biology), the system’s future possibilities are constrained and enabled by that history. This is a (On the roles of function and selection in evolving systems | PNAS) (On the roles of function and selection in evolving systems | PNAS)lution is a history-dependent process – you can’t generally reverse it and you can’t skip intermediate steps easily. The configurations that exist today (like modern cells) carry a record of past selections (Why Everything in the Universe Turns More Complex | Quanta Magazine) (Why Everything in the Universe Turns More Complex | Quanta Magazine)his irreversibility is analogous to thermodynamic irreversibility. In fact, one could say there are twin arrows: entropy’s arrow (increasing entropy) and complexity’s arrow (increasing functional information). They are not contradictory but complementary: entropy’s arrow provides the overall direction and energy dissipation required, while complexity’s arrow traces the specific pathways where entropy production has been harnesse (Microsoft Word - Georgiev ECCS14 7.23.15 rev2 For Arxiv) ()32-L339】.

Emergence, Context, and Levels of Organization

*Emergent Rules: Complexity increases to a new level, we often get new “rules” or principles that govern that level. This is the concept of emergence, famously captured by Nobel physicist P. W. Anderson’s phrase “More is Different”. For example, when individual neurons form a network, new phenomena emerge (like memory, computation) that are not properties of single neurons in isolation. The emergent laws (e.g., psychology or network dynamics) don’t contradict physical laws but are additional, higher-level descriptions. In our context, as complexity grows, the context in which parts operate changes. The same molecule might behave differently within a complex cell than in a dilute solution because the cell provides scaffolding, energy fluxes, and other interactions – effectively a new context that can give the molecule a function. Thus, function is context-dependent: what counts as useful or complex is defined with respect to the environment and system.

This means our measure of complexity (functional information) can itself evolve as context changes. Early in evolution, a simple repl (The Major Transitions in Evolution - Wikipedia)function might just be to make more of itself. Later, in a cell, that RNA might become part of a ribosome and its function is in translation. Its complexity in the new context is judged by new criteria. Therefore, one must keep track of the evolving definition of function. We will see in the core arguments how context shifts (e.g., new levels of (No entailing laws but enablement in the evolution of the biosphere')ike multicellular life or technological ecosystems) alter the landscape of complexity.

Open-Ended Evolution and Incompleteness:

A system exhibits open-ended evolution if it can keep producing novel forms or functions without a known limit. Life on Earth appears to be open-ended in this sense – new species, new biochemicals, new behaviors continue to arise. In contrast, something like a crystal-forming system reaches a limit (once the crystal is formed, (Study: "Assembly Theory" unifies physics and biology to explain evolution and complexity | Santa Fe Institute) (Assembly theory explains and quantifies selection and evolution | Nature)es). One intriguing framework to understand this concept is in terms of possibility spaces. As complexity increases, the space of achievable configurations often expands, because new combinations become reachable. Stuart Kauffman introduced the idea of the adjacent possible – at any state, there is a set of novel states one step away that were not previously accessible; as you move into one of those, new adjacent possibles appear, expanding the frontier. This leads to a combinatorial explosion of what can happen, given enough diversity and time.

Mathematically, one can’t necessarily pre-compute all possible emergent features because they depend on context and higher-order interactions. This resonates with Gödel’s incompleteness in a metaphorical way: G (Consciousness, complexity, and evolution - PubMed)at any formal axiomatic system capable of arithmetic is incomplete – there are true statements it cannot prove. By analogy, any fixed description of an evolving system will eventually be “incomplete” to describe new patterns that emerge. No finite set of rules can entail all future innovations of an open-ended evolving system. Kauffman and others have argued that there are “no entailing laws” fully dictating the evolution of the biosphere; instead, evolution is a process where new possibilities (and new “laws” at higher levels) keep coming into being. This doesn’t violate physical laws; it means that to predict evolution, listing physical laws isn’t enough – you’d need to somehow foresee novel functional (On the roles of function and selection in evolving systems | PNAS)will emerge, which is in principle as h (On the roles of function and selection in evolving systems | PNAS)ematical system trying to produce a stateme (On the roles of function and selection in evolving systems | PNAS) (On the roles of function and selection in evolving systems | PNAS)y.

**Levels of Selection and Indivi (Study: "Assembly Theory" unifies physics and biology to explain evolution and complexity | Santa Fe Institute)complexity grows, often new levels of individuals arise: genes -> cells -> multicellular organisms -> societies, etc. At each level, selection can act (e.g., selection among organisms in a population). For complexity to further increase, cooperation and integration at a lower level must occur so that higher-level entities can emerge (Maynard Smith and Szathmáry emphasized this). There is often a tension between levels – e.g., a cell in a multicellular body could “rebel” (cancer) which is a breakdown of integration. So, a system must evolve governance mechanisms to maintain the new higher level. This is part of the evolving context: what was a freely reproducing cell becomes constrained as part of an organism. But the organism can do things (functions) that single cells cannot, thus unlocking new complexity (like having organs, nervous systems, etc.).

A Possible New Law of Nature?

We now formalize the conjecture mentioned: Could there be a law of nature governing the increase of complexity, analogous in stature to other laws (while, of course, consistent with them)? Hazen, Walker, and colleagues (PNAS 2023) suggest precisely this: If a system has the capacity to explore many configurations and there is selection for function, then functional information tends to increase. In formulaic terms, d(FI)/dt > 0 under those conditions. They compare it to how the Second Law is statistical (entropy tends to increase in closed systems) – here it’s an evolutionary law (functional complexity tends to increase in open, selected systems).

It’s important to test this idea against known examples. Does it hold that functional information increases? In evolutionary experiments, yes – for instance, experiments with digital organisms or RNA molecules show that with mutation and selection, functional efficacy improves over generations, corresponding to higher FI to meet that function. Hazen et al. performed simulations where simple algorithms mutated and competed, and indeed the functional information (relative to a computational task) increased spontaneously over time. In chemistry, one could imagine an experiment where a mixture of polymers is subject to some selection (say binding to a target); over cycles of selection and reproduction (like SELEX experiments for aptamers), the information content of the polymers binding the target increases – this is essentially directed evolution and is routinely observed in the lab. These are evidence that the “law” holds in those scenarios.

One must also consider if there are limits or exceptions. Could FI ever decrease? In principle, if the selection pressures relax or change, complexity might decrease (e.g., parasites often simplify genomically when they start relying on a host – they lose functions that are no longer needed, a kind of reverse complexity). However, the law is stated for when selection for function is present. If the environment no longer selects for a complex function, that function’s associated complexity can dwindle (atrophy of unused traits). So the law would apply chiefly during periods of sustained selection for certain (or new) functions. Additionally, selection can drive simplification if simplicity is favored (some environments favor smaller genomes or fewer parts). Thus, a refined statement might be: functional information of a system tends to increase up to the point that efficiency or adaptation requires simplicity. In practice, evolution often produces a mix of increases and decreases in complexity depending on what is advantageous. But across multiple traits and over long periods, the envelope of what has been achieved keeps expanding.

In summary, our theoretical framework posits that increasing complexity is driven by a combination of:

- Energy flow (to enable local entropy reduction and work against disorder).

- Variation generation (to explore many possible configurations).

- Selection for function (to preferentially retain the more functional/organized configurations, thereby accumulating functional information).

- Emergent stabilization (new structures creating new contexts that allow further complexity to build without falling apart).

- Historical contingency (the path-dependent accumulation, making the process effectively irreversible and open-ended as new possibilities emerge).

With these concepts defined, we can now proceed to the core arguments, examining the pieces of our hypothesis in detail and supporting them with evidence and logical reasoning.

Core Arguments

Functional Information as the Currency of Complexity in Evolution

A central claim of this thesis is that functional information (FI) is the “currency” by which complexity grows in evolving systems. This means that as systems become more complex, what is really increasing is the amount of information specifying configurations that achieve certain functions. In biological terms, evolution encodes information about how to survive and reproduce (functions) in genomes and structures; over time, the information content required for those functions accumulates. In non-biological terms, we can speak of information being embedded in physical structures that allow them to persist or perform certain processes.

FI in Biological Evolution:

Every adaptive trait in an organism (e.g., the camera-eye of vertebrates, the flight mechanism in birds) carries functional information – it is a solution to a problem (seeing, flying) that out of many random configurations, only specific complex arrangements solve. The evolution of such a trait is essentially the increase of FI in the lineage’s genome. Early in eye evolution, perhaps a simple light-sensitive patch was present (low FI for basic light detection). Over time, through selection, more information was added: genes for lenses, for an iris, for image processing, etc. The fully developed eye has much higher FI relative to the function of high-resolution vision than the initial light spot. This increase occurred stepwise with each beneficial mutation adding bits of information (in the sense of reducing the space of possibilities to hone in on ones that work better).

Hazen and Szostak’s work put numbers to this idea using simpler model systems. For example, they considered short protein-like polymers and a target function (binding a particular molecule). If one starts from random sequences (which have low probability of binding well, hence high FI required to achieve strong binding), and then simulate selection and mutation, one observes the average functional information of the population increases generation by generation. In one simulation, random “digits” were evolving to perform arithmetic operations; initially their performance was poor (low FI for that function), but as they evolved, their performance approached theoretical maxima, indicating the system discovered configurations (algorithms) that encode the solution – the FI increased spontaneously over time. These simulations mirror natural evolution in principle.

FI in Chemical Evolution (Origin of Life):

Prior to true reproduction, chemical systems might have undergone a kind of selection. The origin of life can be seen as the gradual buildup of functional information in molecular networks until a tipping point was reached where self-sustaining, self-reproducing systems existed. Hazen (as cited in the Quanta article) argued that life versus nonlife is a continuum, with functional information driving the process from simple to complex. The first replicator (perhaps an RNA molecule capable of making copies of itself) had to be selected from a vast space of random polymers – a dauntingly low probability event unless there were chemical pathways guiding it. Some researchers (e.g., Eigen’s hypothesis of a hypercycle) suggest that partial functionalities (like metabolism, compartmentalization, simple templates) accumulated before full self-replication. In each step, FI increased: e.g., a lipid vesicle that can grow and divide has FI regarding that function of compartmentalization; an enzyme that catalyzes a reaction has FI for that reaction.

As life emerged, functional information became concentrated in polymers (nucleic acids and proteins). An interesting perspective is to consider the total functional information on Earth locked in biology. It has certainly increased from zero before life, to whatever astronomical number of bits is encoded in all the DNA sequences, protein structures, and biochemical networks today. Earth’s biosphere is essentially an FI-rich system, containing solutions to countless functional challenges (from photosynthesis to flight to symbiosis).

FI in Nonliving Complex Systems:

It may seem odd to talk about function for nonliving systems, but recall Hazen’s broad definition: function is just what some selection criterion favors. In stars, the “function” we might consider is something like longevity or energy dissipation. A star configuration that dissipates energy steadily for a long time (through fusion) is “selected” by virtue of persistence. We can imagine measuring the FI of different configurations of matter for the function “remain a star for at least 1 million years”. The Sun’s configuration has high FI for that function – random assemblies of gas do not. How did the Sun (and billions of other stars) come about? Through gravitational collapse, which tried many “configurations” (different fragmentations of a gas cloud), but only certain ones satisfied the criteria to ignite fusion and hold together. In that sense, gravity plus thermodynamics performed a selection of long-lived stars from many initial perturbations. Over cosmic time, more FI is stored in structures like stars and planets compared to the smooth initial state of the universe. Each stable structure (planet, star, crystal) contains information – for instance, the precise positions and bonds of atoms in a crystal lattice encode information about the environment in which it formed.

Another example: planetary atmospheres might evolve complexity. One can think of an atmosphere’s chemical network and possible emergence of far-from-equilibrium states (like Jupiter’s Great Red Spot, a persistent vortex – a structure that serves the “function” of a stable storm). Only certain complex flows produce such giant vortices; they emerge and persist because they are stable configurations that dissipate energy (the gradient between Jupiter’s equator and poles, for example). If we quantitated the complexity of Jupiter’s atmosphere over time, it might have increased as the planet settled into patterns (this is speculative, but illustrates how even non-biological planets can have dynamical structures that contain information).

Cumulative Nature of FI:

One significant aspect of functional information is that it tends to be cumulative: complex systems often retain the simpler functionalities as sub-functions. A multicellular organism still has cells that perform basic metabolism, an advanced technology (like a smartphone) still contains basic electronic components that must function properly. Thus, as complexity grows, it layers new FI on top of existing FI. Evolution doesn’t usually erase the old (unless it becomes a burden); it repurposes and adds onto it. This means the total FI of a system can keep growing. However, it also means systems can accrue “information debris” or vestigial parts that no longer serve a function, which may later be lost (this is one way FI can decrease locally).

To use a metaphor: functional information is like knowledge gained by a learning process (here, evolution is the learning algorithm). Just as a human technical knowledge base increases over history (we rarely lose fundamental knowledge, we only add more), the biosphere’s knowledge (DNA) about how to survive in various ways has increased. Occasional loss of information happens (extinctions, simplifying parasites), but the overall pool on the planet has grown (especially with human culture adding another layer of information).

In conclusion of this section, functional information provides a robust way to discuss complexity increase quantitatively. It grounds the abstract idea of “order” in concrete, measurable terms of function and probability. This concept will be used in subsequent sections to argue for a universal selection-driven increase in complexity.

Selection Processes Beyond Biology: A Universal Darwinism

One of the bold assertions we explore is that the Darwinian mechanism of variation and selection is not exclusive to life, but a general algorithm that can occur in many systems, thereby driving complexity outside the biological realm. This idea, sometimes called “universal Darwinism,” extends the principles of evolution to phenomena like chemistry, astrophysics, and even information systems.

Darwinian Algorithm in Abstract:

The core of Darwinian evolution is often summarized as: Replication, Variation, Selection. However, even without strict replication, a similar outcome arises if there is repeated trial and error with retention of successes. We can phrase a generalized Darwinian algorithm:

- Generate many configurations (states, patterns) of a system (by whatever process – random fluctuations, exploratory moves, etc.).

- Have a criterion that preferentially keeps some configurations around longer or makes them more likely to be the basis for the next generation of configurations. (This is analogous to selection – the criterion is usually a function or stability measure).

- Repeat, with modifications or combinations of the retained configurations generating new ones.

Biological evolution fits this perfectly: organisms generate offspring with variations; nature selects those better fitted; repeat over generations. Now, consider other domains:

Chemical Selection:

Before life, chemical reactions in a complex mixture can produce a variety of molecules. If some molecule catalyzes its own formation (an autocatalytic molecule) or is especially stable, it will accumulate relative to others. This is a selection effect. Over time, the mixture’s composition shifts towards molecules that are stable or self-promoting – a primitive evolutionary dynamic. Researchers have created experiments with RNA strands in test tubes (like Spiegelman’s RNA replication experiment) that show selection for faster replicating RNA: even outside a cell, if you provide the machinery (a replicase enzyme) and variation (initial random RNA), the RNAs that happen to replicate fastest will dominate. This is evolution in vitro, and it doesn’t require a “living” organism per se. Similarly, in crystal growth, one might say the crystal structure that best fits the conditions nucleates and grows, whereas other arrangements don’t propagate – the environment “selects” a particular crystal form among possible polymorphs.

Mineral and Stellar “Evolution”:

Hazen et al. have likened processes like mineral diversification to evolution. In mineral evolution on Earth, each stage of mineral formation changed the environment in ways that allowed new minerals to form. For example, once photosynthesis put oxygen into the atmosphere, many new oxide minerals appeared (like iron oxides, giving us red rust layers in geological strata). The phrase “selection” can be used here: the changing environment “selects” which minerals are stable. If a mineral forms that is unstable in oxygen, it will be converted into another (so it disappears, akin to being unfit). Only those mineral species that can persist in the new conditions remain. Over time, more and more mineral species accumulated, in part because new ones didn’t replace all old ones but added to the total in new niches (some pockets with no oxygen still held old minerals, etc.). So diversity and complexity of the mineral ensemble increased. This is analogous to an ecosystem diversifying under varied conditions.

In stellar evolution (the term “stellar evolution” in astrophysics refers to a star’s life cycle, but here we mean population of stars), a giant molecular cloud might fragment into many protostars. Not all survive – some may merge or get disrupted. The ones that achieve stable nuclear fusion and balance will shine as stars for a long time (selected for persistence), whereas others might fizzle out as brown dwarfs or get torn apart. Over multiple generations (massive stars explode as supernovae, seeding new clouds with heavy elements, which then form second-generation stars), one could see a form of iteration. Stars don’t replicate in the way organisms do, but there is a population with variation (different masses, compositions) and differential outcomes (some live longer, some shorter, some produce more offspring like planets or new clouds). One might say the universe “selects” for stars that are good at dispersing energy from collapsing gas (since that’s what they do). The concept of cosmic natural selection proposed by Smolin goes further to speculate that universes might breed via black holes, but even if that is far-fetched, within a universe we see repeated cycles of structure formation with a kind of filtering mechanism – call it selection – at work.

Memetic and Cultural Evolution:

Richard Dawkins coined the term meme for a cultural idea or practice that propagates from mind to mind, analogous to a gene. Memes clearly undergo variation (people modify stories, invent new tunes), and selection (catchy or useful ideas spread; others fade away). The evolution of languages, for example, is an evolutionary process – words and grammatical structures mutate over centuries, and those that are easier or more expressive survive in usage. Although driven by conscious agents, the large-scale pattern is unintentional and Darwinian in character. Cultural evolution has led to increasing complexity of technologies and social systems. Simple stone tools evolved into today’s vast technological ecosystems not by genetic evolution, but by a cultural analogue where human choices provide selection pressures (often favoring more complexity because it can achieve more functions).

In technology, there’s even evolutionary computing: algorithms improve solutions by simulating selection (generating many candidate solutions and selecting the best to “reproduce”). These algorithms have produced innovative designs (like antenna shapes evolved by NASA’s software) that human engineers might not have conceived, demonstrating the general power of selection to create complex, functional designs even outside organic life.

Common Principles:

What unites these examples is differential persistence. Selection doesn’t always require literal self-replication; it can work whenever some configurations outlast or outperform others. As Hazen et al. put it, Darwin’s natural selection is just one instance of a “far more general natural process”. They note that some biologists object to using the term “evolution” for things like minerals or stars because of the lack of genetic inheritance. However, if we abstract away the specific mechanisms, the logic of selection applies broadly. Indeed, Hazen’s “law of increasing functional information” was explicitly intended to apply “to both living and nonliving evolving systems”.

One might ask: is there any system where selection wouldn’t eventually produce complexity if given variation and time? If the selection criterion is trivial (or if there’s no criterion at all), then complexity might not increase. For instance, if survival is purely random (no advantage to complexity), then you won’t see a trend – you’d just get drift. Or if the environment is static and favors one simple configuration strongly above all others, the system might evolve to that simple optimum and then stop (no further complexity needed). But in many interesting systems, especially open ones with changing environments or competitive interactions, there is pressure for continued adaptation, which can drive continued complexity.

Static vs. Dynamic Selection:

It’s worth distinguishing static environments (selection for one optimum state) versus dynamic or open-ended environments (where the targets or niches keep changing or expanding). Complexity is more likely to dramatically increase in the latter, because the goalpost moves or new niches appear, requiring additional FI to exploit. Earth’s biosphere has been dynamic – oxygen emergence, climate changes, species co-evolution – all these ensured no single simple configuration could dominate permanently; thus complexity kept ratcheting up in some lineages to meet new challenges or opportunities.

We can conclude that evolution by natural selection is not an anthropomorphic concept but a logical framework applicable to any system with certain properties. It serves as a universal engine of complexity, given it has something to act on. The universality of Darwinism strengthens our hypothesis that complexity increase is a general phenomenon: wherever such an engine operates, complexity measured as functional information will tend to rise.

Complexity as a Directional Arrow of Time

We now address directly the concept of complexity as an arrow of time – the idea that as time progresses, there is a discernible direction in which complexity grows (at least in certain parts of the universe), much like entropy’s inexorable increase provides a time arrow. Is complexity’s increase sufficiently general and persistent that we can treat it as an arrow of time? And if so, what does that imply about the nature of the universe?

Empirical Observations:

From the perspective of 13.8 billion years of cosmic history, one can narrate an “arrow” of increasing complexity:

- Immediately after the Big Bang, the universe was nearly homogeneous plasma.

- By a few hundred thousand years, it was mostly hydrogen and helium gas (atoms formed).

- By a few hundred million years, the first stars and galaxies (structured collections of particles) formed.

- Over billions of years, successive generations of stars created heavier elements, which formed planets and more chemically diverse environments.

- About 4.5 billion years ago, Earth formed; by ~4 billion years ago, chemical processes on Earth led to the first proto-life (simple self-replicating molecules).

- Life then increased in complexity through single-celled to multicellular (by ~600 million years ago complex animals appeared), then through the Cambrian explosion to even more complex forms.

- Intelligence and tool use evolved (our hominid ancestors in the last few million years), culminating in a technological civilization (present day) that introduces new forms of complex structures (cities, computer networks).

This storyline suggests a cumulative trend. Of course, it is biased in that we are focusing on one region (Earth) and one outcome (us) as emblematic. The universe as a whole is mostly still vast space with simpler structures like stars and diffuse gas. But the existence of even one trajectory from Big Bang to sentient life is telling: it shows what is possible under the laws of physics. And if it happened in one place, it might in others, making it part of the universal story rather than a fluke.

There is also a quantitative aspect to this arrow. Eric Chaisson’s plots of free energy rate density, mentioned earlier, show an upward trend when plotted against time of origin. For example, he estimates:

- Early stars: Φ (energy flow density) on the order of 1 erg/s/g.

- Modern sun-like stars: a few erg/s/g.

- Simple plants: tens to hundreds of erg/s/g (they process more energy per mass through metabolism).

- Animal bodies: hundreds to thousands.

- Human brain: ~10510^5 erg/s/g (very high energy throughput relative to its mass). He notes an apparent exponential increase over time, with humans at the top end currently. While energy rate density is just one measure, it correlates with organizational complexity (brains are more complex than stars). Thus, one could say the capacity to process energy/information has increased, marking a direction.

The Role of Entropy Production:

Why does complexity often increase? Non-equilibrium thermodynamics provides an answer: systems will evolve structures that dissipate free energy effectively. This is sometimes called the “MaxEnt Production” conjecture – that systems with many degrees of freedom often find pathways to maximize entropy production. This can drive complexity because complex organized systems can sometimes dissipate energy faster than simple ones. For instance, a forest (complex ecosystem) is very good at capturing sunlight and producing entropy (through respiration, decay, etc.) compared to bare rock. The biosphere increased Earth’s overall entropy production by exploiting sunlight more fully (e.g., formation of soil, etc., that wouldn’t happen on a lifeless planet). Thus, there is a synergistic link: the arrow of entropy (global increase) may channel a local arrow of complexity (complex structures form to aid in entropy production). This idea was championed by Schneider and Kay (1994) who argued that life is a response to energy gradients – basically, life is what happens as an efficient way to degrade the solar gradient.

Irreversibility of Complexity Build-up:

Complexity’s arrow, like entropy’s, has an aspect of irreversibility. Once information is generated, it tends to persist (barring large disturbances). For example, the genetic information in modern organisms traces all the way back – it doesn’t spontaneously revert to a simpler form. The biosphere will not spontaneously go back to just bacteria everywhere; that would require some cataclysm (which is an input of energy or change, not a spontaneous reversal of time). Similarly, the structures in the universe (galaxies, etc.) won’t just smear back into homogeneous gas without some dynamic cause. In this sense, complexity increase where it has occurred has a “memory” – it’s recorded in physical structures and cannot be easily undone. This memory is one reason complexity can keep building (it builds on prior complexity).

Exceptions and Non-Monotonicity:

It must be acknowledged that complexity’s arrow is not as uniform or omnipresent as entropy’s. Entropy increases inexorably in a closed system. Complexity can decrease under some circumstances – e.g., mass extinctions can wipe out complex organisms, leaving simpler survivors. After the End-Permian extinction (~252 million years ago), the number of very complex land vertebrates dropped dramatically; complexity in ecosystems took a hit. However, it recovered and then exceeded previous levels (the Mesozoic had even more varied large animals). So, complexity’s arrow might zigzag – periods of increase, occasional setbacks, but an overall trend upward over the very long term (at least on Earth). Complexity also doesn’t increase everywhere: some environments remain simple (e.g., the deep subsurface of Earth still has only microbial life, which is not much more complex than billions of years ago). The arrow appears when you look at the maximum or the frontier of complexity in the most innovation-rich systems.

It’s valid to ask: is this arrow of complexity an inherent feature of the laws of nature, or a contingent outcome? This is a deep question. If one rewound and replayed the universe, would complexity always emerge and increase? Simon Conway Morris argues convergently that intelligence and complex life might be almost inevitable given enough time, suggesting a built-in arrow. Gould argued it’s highly contingent (replay the “tape of life” and you’d get a different outcome, maybe not intelligence). Even if contingent, as long as the possibility space allows complexity, and there are selection mechanisms, complexity likely will emerge somewhere in the universe’s phase space – which is enough to call it a tendency.

Analogy with Entropy’s Arrow:

We can draw an analogy: Entropy increase is guaranteed by the second law, but it doesn’t guarantee where entropy is produced fastest or what intermediate states appear. Complexity increase is not “guaranteed” by a simple law in the same way, but it appears to be encouraged by the way physical laws permit local entropy reduction via energy flows. We might say complexity has a weak arrow – a general push in one direction – as opposed to a strict law. Part of the aim of this thesis is exploring if we can strengthen that to a law-like statement (with conditions) as Hazen’s team attempted.

In summary, complexity does function as an arrow of time in many contexts. The past was simpler; the present is more complex – at least in our corner of the universe. The key distinction: entropy’s arrow is universal and unavoidable, whereas complexity’s arrow is conditional (it manifests in regions where certain conditions – energy flow, variation, selection – are met). Nonetheless, given that those conditions appear naturally (stars, planets with flux, etc.), complexity’s arrow might be almost as cosmically pervasive as entropy’s, albeit in a patchwork fashion.

A Possible New Law of Thermodynamics? Physical and Philosophical Implications

Is there a new law of nature waiting to be articulated – one that codifies the growth of complexity analogous to how the Second Law codifies entropy growth? If so, what would it look like and what are its implications for physics and philosophy? In this section, we discuss the idea of formulating such a law and the conceptual shifts it might entail.

The “Law of Increasing Functional Information”:

We have already introduced the proposal by Hazen, Walker, et al.: “The functional information of a system will increase if many different configurations of the system undergo selection for one or more functions”. Let’s unpack this. It is structured similarly to the Second Law: the Second Law says “entropy of a closed system stays constant or increases.” The proposed law says “functional information of a (open, varying, selected) system stays constant or increases (and practically increases if selection is ongoing).” It’s not as succinct as “entropy increases,” but then, entropy’s law comes with qualifiers (isolated system). Here the qualifiers are important: you need (a) many configurations tried, and (b) selection for function. Those imply an open system with some mechanism of variation and some criterion – in other words, an evolving system.

One implication of articulating this as a law is to elevate the status of evolutionary processes to something fundamental in nature. It suggests that the emergence of complexity is not just a random fluke on Earth but a lawful process that would happen under suitable conditions anywhere. It is a unifying statement: it puts living and nonliving under one framework. It tells physicists that perhaps their list of laws is incomplete without something that addresses information and evolution.

Philosophically, this is significant because it challenges reductionism. Reductionism holds that all higher-level phenomena (like life, mind, etc.) are explained by known physical laws (quantum physics, etc.). In a reductionist’s perfect world, one wouldn’t need an additional law of complexity; the second law and initial conditions would suffice. However, if one finds that no combination of known laws and reasonable initial conditions can easily explain the emergence of complexity we see, one might suspect a missing principle. It might be akin to how in the 19th century, one could in principle explain a thermodynamic engine by Newton’s laws applied to molecules, but in practice a higher-level law (second law) was formulated to capture an emergent regularity (heat flows one way) without tracking all molecules. Similarly, a law of increasing FI would capture an emergent regularity of complex systems without solving the Schrödinger equation for zillions of particles.

Consistency with Second Law:

Any new law must, of course, not contradict established ones. Hazen’s proposal is explicitly stated to be consistent with the second law. Indeed, it works in concert: selection processes require energy flow (to generate variations, to build structures), which inevitably produce entropy. Our law doesn’t make entropy decrease; it just says in the process of increasing entropy, the system can self-organize to increase functional info. One thought experiment: imagine a universe identical to ours in physical laws except that it somehow lacked the mechanisms for selection (perhaps all processes are either completely random or always go to equilibrium quickly). That universe might still increase entropy but might never build much complexity. The fact our universe does build complexity indicates there is an additional principle at play – selection for function – which our proposed law encapsulates.

A “Fourth Law”?:

If we consider classical thermodynamics, we have four laws (including the zeroth). Some have playfully suggested a “fourth law of thermodynamics” that addresses self-organization. For instance, the Constructal Law (Adrian Bejan, 1997) says that flow systems evolve to maximize flow access (which often leads to branching structures, etc.). It’s an attempt at a general law of design in nature. The law of increasing FI could similarly be seen as a “fourth law” – but it is broader than thermodynamics since it involves information and selection.

One philosophical implication is that information might need to be recognized as a fundamental quantity in physics, akin to energy or entropy. In recent decades, physicists have indeed increasingly viewed information as fundamental (e.g., black hole information paradox, Landauer’s principle relating information erasure to entropy). If a law of increasing FI holds, it means that information (of a certain kind) naturally accumulates in the universe. That might have deep connections to how we understand time and causality. For example, the universe could be seen not just as running toward heat death, but also running toward higher complexity pockets – two arrows intertwined.

Teleology without Teleology:

A new law of complexity might make the universe appear teleological (purpose-driven toward complexity), but it really isn’t teleology in the mystical sense – it’s a natural law, a blind process. However, such a law would formally articulate a kind of direction or “trend” that one could mistake for purpose. Philosophers of science would likely debate whether this imbues the cosmos with a direction akin to purpose or is simply a neutral tendency.

Testability and Falsifiability:

For a law to be scientific, we need ways to test it. How could we test a law of increasing FI? One way is through computer simulations and experiments:

- In artificial life simulations, does functional info reliably increase? (Generally yes, if selection is present, it should.)

- In observing different systems (e.g., comparing planets or environments), do we see that those with more opportunities for selection have higher complexity? For example, Earth vs. Mars – Earth has life, Mars seemingly not; Earth had more dynamic conditions perhaps, or just luck. If we found life on Mars in the past, it would support that wherever possible, complexity arises.

- One could potentially use chemical networks in the lab: set up two scenarios, one where selection is operating (say a recursive selection process like a serial transfer experiment for autocatalysis) and one control where it’s just random, and measure if FI (for some defined function) goes up in one and not the other.

If the law is true, it suggests that whenever we find a system that has undergone many selection iterations, it should have more FI than it started with. If we found contrary evidence (e.g., a long-lived ecosystem that somehow lost complexity without any external disturbance), that would challenge it. So far, life’s history doesn’t give such contrary evidence at the macro scale – complexity has generally risen, though not monotonically.

Philosophical Shift – from Being to Becoming:

Traditional physics is mostly about being – states of systems and timeless laws. An evolutionary law is about becoming – it’s inherently time-oriented and creative. Accepting a law of increasing complexity would be a shift towards seeing the process of the universe as something that can be lawfully characterized, not just the outcomes. It aligns with a process philosophy view (like those of Alfred North Whitehead or Teilhard de Chardin’s ideas, albeit now in scientific garb).

It’s noteworthy that the law of increasing FI is not deterministic in detail; it doesn’t tell you which complexity will arise, just that some will under selection. This introduces a law with an element of probability and contingency, similar to how the second law is statistical (entropy likely increases, though fluctuations can decrease it locally). This might herald a new kind of law in physics that is less rigidly predictive of exact events and more about overarching trends. Some philosophers might argue whether that qualifies as a “law” in the strict sense, but in practice the second law itself is statistical.

Relation to Information Theory and Computation:

Another implication is connecting to computation. The universe producing increasing FI could be seen as the universe performing computation – natural processes computing solutions to constraints (like evolution “computing” an eye design). Some like Seth Lloyd have even said “the universe is a quantum computer” that has been computing its state all along. If complexity growth is lawlike, then maybe the universe inherently computes increasingly complex structures. There’s an analogy to be made with algorithmic information: the output of certain computations increases in algorithmic complexity over time. Perhaps the universe’s time evolution is akin to running an algorithm that generates complexity (except, of course, it’s not aiming for complexity, it’s a side effect of running physics with certain boundary conditions).

Limits of the Law – The Entropic Doom:

One sobering consideration: if the law holds now, will it always? Eventually, as the universe approaches heat death (if indeed it does), free energy sources will wane. Complexity can’t increase without energy flows. So in a cosmological sense, complexity’s arrow might only run during the universe’s middle age, not at the end. In endless expanding universe scenarios, stars burn out, and unless new energy sources (like proton decay or something exotic) feed complexity, it might plateau or decline. So the law might be epoch-dependent. However, that doesn’t invalidate it as a law applying whenever conditions are right, much like life can’t persist without energy but given energy, life emerges.

In conclusion, positing a new law akin to a thermodynamic law for complexity is both exciting and challenging. It forces us to broaden our conception of fundamental principles to include those that talk about evolution and information, not just static quantities. If embraced, it could unify disparate fields under a common principle and guide the search for complexity elsewhere (as we’ll see in astrobiology implications). It also elevates the status of function in scientific discourse – moving it from a concept mostly used in biology into fundamental physics (something even a rock has in context). This bridging of teleonomic language (function, selection) with physics might be one of the biggest philosophical shifts from this line of thought.

Evolving Function and Context: What Counts as “Complex”?

One of the subtler aspects of our hypothesis is that what we consider “complex” is not absolute – it evolves as functions and contexts evolve. In other words, complexity is, to a degree, in the eye of the beholder (or in the “needs” of the system): it depends on what function you care about and the context in which a system operates. As systems become more complex, they also redefine the criteria for complexity at the next level. This recursive aspect is crucial for understanding open-ended evolution.

Context-Dependence of Functional Information:

As described earlier, functional information is defined only with respect to a chosen function. For a single enzyme, FI could be measured for the function “catalyze reaction X”. But that enzyme might also incidentally catalyze a very slow version of reaction Y or bind some other molecule weakly – if we considered those as the function, the FI would be different. Typically, in evolution, the function is dictated by what the environment “rewards.” For early life, a “function” might be simply self-maintenance in a chemical sense. Once life had cells, new functions became relevant: motility, capturing light, etc. The complexity measured relative to those functions then began to increase.

For example, the complexity of a bacterium can be measured by how much information it encodes to survive in its niche (nutrient metabolism pathways, etc.). Now consider a multicellular organism, say a sea sponge. In one sense, a sponge is more complex than a bacterium – it has multiple cell types and a larger genome. But if we asked, “how complex is a sponge’s genome relative to performing basic metabolism?” it might have a lot of extra DNA that isn’t needed for metabolism but is needed for multicellularity. In the context of “metabolism,” the sponge is over-specified; in the context of “forming a multicellular body with some cell differentiation,” the bacterium has essentially zero capability, whereas the sponge has the necessary information.

Thus, new context enables new functions which then define new measures of complexity. When life became multicellular, “organismal complexity” became a thing – and we start counting cell types or tissue specialization as complexity. When brains evolved, “neural complexity” or cognitive function became a new context – a simple brain might be considered functionally simpler in that context than a complex brain, whereas in the context of survival both suffice for their organisms.

In technology, a similar phenomenon: The complexity of a mechanical calculator from the 1950s is high relative to the function “do arithmetic faster than a human.” But in today’s context, that’s trivial – a basic phone chip does that easily; now complexity is judged by functions like “run a global communication network.” So as capability grows, what we label as complex often shifts upward. This can make it tricky to have a single metric over long time spans, but functional information handles this by always tying to a specified function. It means we have to update our perspective as new functions appear.

Exaptation and Change of Function: