Time, Entropy, and Complexity

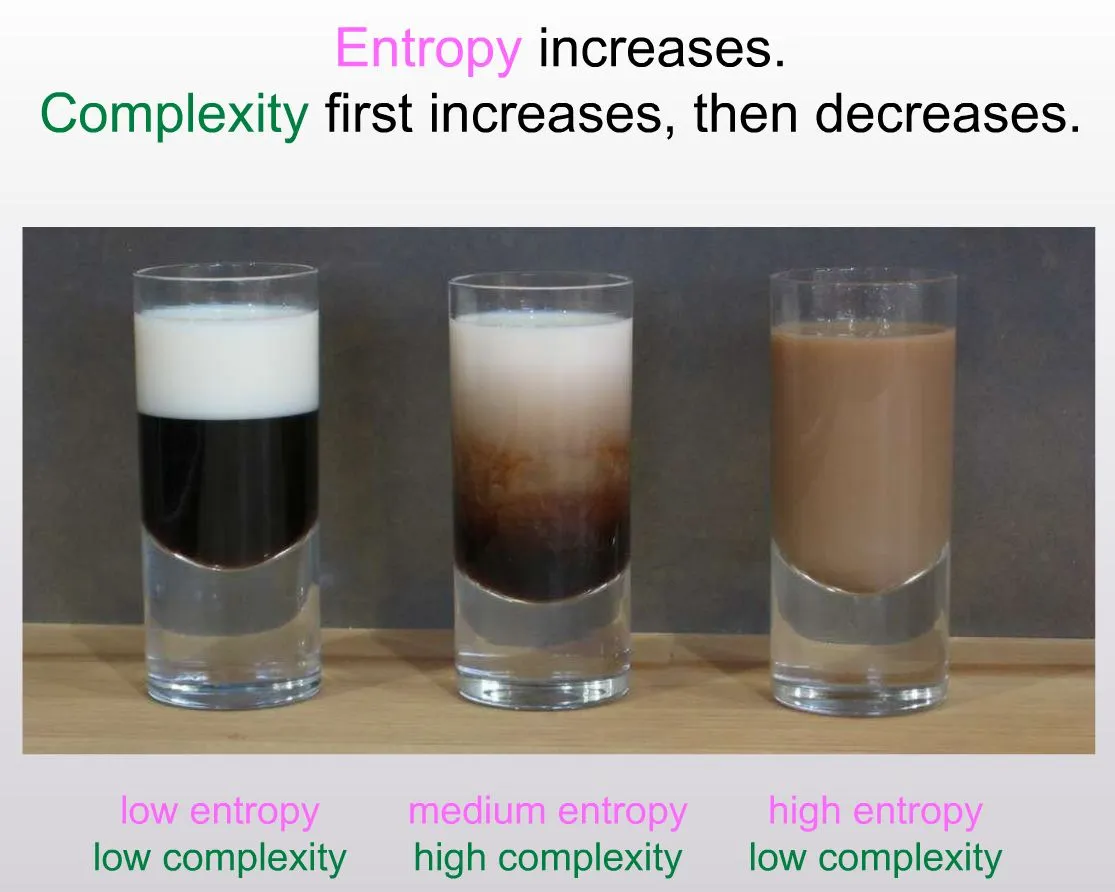

Imagine you have a simple cup of coffee with milk. Initially, you see two neat layers—milk on top, coffee below. Over time, the milk and coffee mix and become one smooth blend. This process is an example of entropy increasing: the system moves from a more ordered arrangement (two clear layers) toward a more disordered one (evenly blended).

What Is Entropy?

In physics, entropy is linked to how many microscopic states (“microstates”) fit the visible, overall state (“macrostate”). When milk is still separate from coffee, there are fewer ways the molecules can be arranged while still looking “layered.” Once they blend, there are many more possible ways the molecules can move around and still look “uniformly mixed.” Because there are more possible “mixed” microstates, entropy goes up when milk and coffee stir together.
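Boltzmann's formula, S = k_B ln Ω, makes this counting argument concrete. Here Ω is the number of microstates compatible with a macrostate. The sketch below uses a toy model that is our own assumption, not a model of real molecules. A column of 2n cells holds n identical milk molecules and n identical coffee molecules, one per cell. The macrostate is simply how many milk molecules sit in the top half. The function names are hypothetical, and entropy is reported in units where k_B = 1.

```python
from math import comb, log


def microstates(n: int, k: int) -> int:
    """Count arrangements with k of the n milk molecules in the top half.

    Toy model (an assumption for illustration): a column of 2n cells, each
    holding one molecule; molecules of the same liquid are indistinguishable.
    The top half has n cells: choose which k hold milk -> C(n, k).
    The bottom half holds the other n - k milk molecules -> C(n, n - k).
    """
    return comb(n, k) * comb(n, n - k)


def entropy(omega: int) -> float:
    """Boltzmann entropy S = ln(Omega), in units where k_B = 1."""
    return log(omega)


n = 10
# k = n is the neatly layered macrostate; k = n // 2 is "evenly mixed".
for k in (n, 8, n // 2):
    omega = microstates(n, k)
    print(f"milk molecules in top half = {k:2d}: Omega = {omega:6d}, S = {entropy(omega):.2f}")
```

With n = 10, the fully layered macrostate (all milk on top) has exactly one microstate and zero entropy. The half-and-half macrostate has 252 × 252 = 63,504 microstates. Summed over every macrostate, the counts total C(20, 10) = 184,756, which is the number of ways to place the milk anywhere in the column.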

Why Does Entropy Tend to Increase Over Time?

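On large scales, when a system can shift from a macrostate with few microstates to one with many, it tends to do so. This shift happens all around us: heat spreads through a room, decks of cards get more shuffled, and neat piles of laundry get scattered. A system wandering at random among its microstates is far more likely to end up in a “mixed-up” macrostate than to stay carefully arranged, simply because there are overwhelmingly more disordered microstates than ordered ones. For an isolated system, this statistical tendency is what the second law of thermodynamics describes. With enough particles, a spontaneous return to order is possible in principle but vanishingly unlikely.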
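The Ehrenfest urn model is a standard toy model of this tendency. We use it here as a stand-in for molecules spreading through a box; the substitution is ours. N particles start in the left half, and at each step one particle, chosen uniformly at random, hops to the other half. Nothing pushes particles toward the right. Entropy rises only because most random choices move the system toward the more numerous mixed macrostates. The function name and parameters are illustrative.

```python
import random
from math import comb, log


def ehrenfest(n_particles: int, steps: int, seed: int = 0) -> list[float]:
    """Simulate the Ehrenfest urn model and track Boltzmann entropy.

    All particles start in the left half (a low-entropy macrostate). Each step,
    one particle chosen uniformly at random hops to the other half.
    Returns S = ln C(N, k) after every step, where k is the number of
    particles on the left (units with k_B = 1).
    """
    rng = random.Random(seed)
    left = n_particles  # particles currently in the left half
    history = [log(comb(n_particles, left))]
    for _ in range(steps):
        # The randomly chosen particle is on the left with probability left / N.
        if rng.random() < left / n_particles:
            left -= 1
        else:
            left += 1
        history.append(log(comb(n_particles, left)))
    return history


N = 100
s = ehrenfest(N, steps=1000)
s_max = log(comb(N, N // 2))  # entropy of the most numerous (50/50) macrostate
print(f"start S = {s[0]:.2f}, after 1000 steps S = {s[-1]:.2f}, max S = {s_max:.2f}")
```

With N = 100, entropy climbs from 0 to near its maximum of about 66.8 and then fluctuates there. Rerunning with only a handful of particles (say N = 4) shows entropy repeatedly dipping back down. This is why the tendency is overwhelmingly reliable for large systems, even though it is only a statistical one.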

Complexity’s Rise and Fall

Interestingly, the most “complex” patterns often appear partway through the mixing. If you watch coffee swirl into milk, you’ll see beautiful, elaborate shapes for a moment before it all becomes plain brown. Complexity, roughly how much detail it takes to describe the pattern you see, spikes during these transitions. At that stage there’s enough structure to form interesting patterns, but not so much mixing that everything looks the same. Once the mixture becomes uniform, complexity drops again.
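One way to make “complexity” measurable is to coarse-grain the cup, blurring out molecular detail the way your eye does. You can then use compressed size as a rough stand-in for how much information a description needs. This approach is loosely in the spirit of the “coffee automaton” studied by Scott Aaronson, Sean Carroll, and Lauren Ouellette. The sketch below makes several assumptions of our own:

- a square grid of 0s (coffee) and 1s (milk)
- mixing by random swaps of neighboring cells
- a 7 × 7 averaging window quantized into three levels
- zlib compression as the information measure

The function names and parameters are hypothetical. Compressed size is only a crude proxy for the formal quantities physicists use.

```python
import random
import zlib

Grid = list[list[int]]


def initial_grid(size: int) -> Grid:
    """Milk (1) fills the top half, coffee (0) the bottom half."""
    return [[1 if r < size // 2 else 0 for _ in range(size)] for r in range(size)]


def mix(grid: Grid, swaps: int, rng: random.Random) -> None:
    """Swap randomly chosen neighboring cells in place, a toy stand-in for diffusion."""
    size = len(grid)
    for _ in range(swaps):
        r, c = rng.randrange(size), rng.randrange(size)
        dr, dc = rng.choice(((0, 1), (1, 0), (0, -1), (-1, 0)))
        r2, c2 = r + dr, c + dc
        if 0 <= r2 < size and 0 <= c2 < size:  # the cup has walls: no wrap-around
            grid[r][c], grid[r2][c2] = grid[r2][c2], grid[r][c]


def coarse_grain(grid: Grid, window: int) -> Grid:
    """Average each cell's window x window neighborhood (clipped at the walls) and
    quantize it to 0 (mostly coffee), 1 (mixed), or 2 (mostly milk)."""
    size, half = len(grid), window // 2
    out = []
    for r in range(size):
        row = []
        for c in range(size):
            cells = [grid[i][j]
                     for i in range(max(0, r - half), min(size, r + half + 1))
                     for j in range(max(0, c - half), min(size, c + half + 1))]
            avg = sum(cells) / len(cells)
            row.append(0 if avg < 1 / 3 else 2 if avg > 2 / 3 else 1)
        out.append(row)
    return out


def compressed_size(grid: Grid) -> int:
    """Length in bytes of the zlib-compressed grid: a crude proxy for information content."""
    return len(zlib.compress(bytes(v for row in grid for v in row), 9))


def run(size: int = 24, window: int = 7, snapshots: int = 12,
        swaps_per_snapshot: int = 30_000, seed: int = 0) -> list[tuple[int, int]]:
    """Return (fine-grained size, coarse-grained size) at each snapshot."""
    rng = random.Random(seed)
    grid = initial_grid(size)
    history = []
    for snapshot in range(snapshots + 1):
        if snapshot > 0:
            mix(grid, swaps_per_snapshot, rng)
        history.append((compressed_size(grid), compressed_size(coarse_grain(grid, window))))
    return history


for fine, coarse in run():
    print(f"fine-grained: {fine:4d} bytes   coarse-grained: {coarse:4d} bytes")
```

The fine-grained size, an entropy proxy, should grow as the layers blend. If the picture described above holds, the coarse-grained size (the complexity proxy) should behave differently. It should start small, grow while the boundary is ragged, and shrink again once the blend looks uniform. On a grid this small, sampling noise in the final mixture keeps it from falling all the way back to its starting value.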

Emergence and Information

The idea of “emergence” is that a system can display new and unexpected behaviors when lots of simple parts interact. Think about a brain: a single neuron does something fairly simple, but when billions connect and exchange signals, intelligence can emerge. Similarly, the swirling coffee-and-milk system shows how information about boundaries (milk vs. coffee) can briefly form intricate patterns before everything smooths out.

Putting It All Together

- Over time, systems move from macrostates with fewer microstates toward macrostates with more.

- Entropy measures how many microstates fit the overall state, and it tends to increase with time.

- Complexity often flares up in the middle, when there’s still enough order to form patterns but enough disorder to allow variety.

From coffee cups to galaxies, this interplay of time, entropy, and complexity shapes the universe. We see transient structures bloom as systems head toward more mixed states, reminding us that nature’s most intriguing patterns can appear—and disappear—during the dance between micro and macro.

To increase intelligence, you have to increase entropy, but encode the newer, not-yet-defined structures (which have more degrees of freedom and more potential for complexity) with more complex sensory patterns. You do this by inducing correlations with them across various time scales throughout the whole neural network, in the most efficient, compressed, dynamical, topologically efficient, heterarchical representations possible.


— Burny — Effective Omni (@burny_tech) May 15, 2024